Next: Prototype features

Up: Video Representation

Previous: Video Segmentation

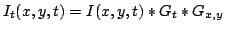

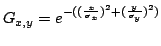

For each image frame in the video we

extract objects of interest, typically moving objects. We make no

attempt to track objects. The motion information is computed

directly via spatiotemporal filtering of the imageframes:

,

where

,

where

is the temporal Gaussian

derivative filter and

is the temporal Gaussian

derivative filter and

is

the spatial smoothing filter.

This convolution is linearly

separable in space and time and is fast to compute. To detect

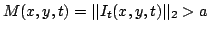

moving objects, we threshold the magnitude of the motion filter output

to obtain a binary moving object map:

is

the spatial smoothing filter.

This convolution is linearly

separable in space and time and is fast to compute. To detect

moving objects, we threshold the magnitude of the motion filter output

to obtain a binary moving object map:

. The process is demonstrated in

figure 2(a)-(b).

. The process is demonstrated in

figure 2(a)-(b).
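The filtering and thresholding step can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation used here: the kernel shapes, the function names, and the default values of `sigma_t`, `sigma_xy` and `threshold` are all assumptions chosen for the example. The sketch applies the temporal derivative kernel along the time axis and the smoothing kernel along each spatial axis, which is what separability allows.

```python
import numpy as np


def gaussian_derivative_kernel(sigma, radius):
    # Assumed form: g(t) = t * exp(-(t / sigma)^2), sampled at integer offsets.
    t = np.arange(-radius, radius + 1, dtype=float)
    return t * np.exp(-((t / sigma) ** 2))


def gaussian_kernel(sigma, radius):
    # Assumed form: exp(-(x / sigma)^2), normalized to sum to 1.
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-((x / sigma) ** 2))
    return k / k.sum()


def convolve_axis(volume, kernel, axis):
    # Zero-padded 1-D convolution along one axis; output keeps the input shape.
    if volume.shape[axis] < kernel.size:
        raise ValueError("axis is shorter than the kernel")
    return np.apply_along_axis(
        lambda v: np.convolve(v, kernel, mode="same"), axis, volume
    )


def moving_object_map(frames, sigma_t=1.0, sigma_xy=1.0, threshold=0.1):
    """frames: array of shape (T, H, W). Returns a boolean array of that shape."""
    frames = np.asarray(frames, dtype=float)
    g_t = gaussian_derivative_kernel(sigma_t, max(1, int(round(3 * sigma_t))))
    g_s = gaussian_kernel(sigma_xy, max(1, int(round(3 * sigma_xy))))
    # Separability: one temporal pass, then one pass per spatial axis.
    response = convolve_axis(frames, g_t, axis=0)
    response = convolve_axis(response, g_s, axis=1)
    response = convolve_axis(response, g_s, axis=2)
    return np.abs(response) > threshold


# Synthetic clip: a bright block moving one pixel to the right per frame.
clip = np.zeros((12, 16, 16))
for t in range(12):
    clip[t, 6:10, 2 + t:5 + t] = 1.0
mask = moving_object_map(clip)
```

Because the convolution is zero-padded, the first and last few frames of a clip respond even to a static bright scene, so in this sketch only frames at least one kernel radius away from either end should be trusted.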

Figure 2: Feature extraction from video frames. (a) Original video frame from the card game sequence. (b) Binary map of objects. (c) Spatial histogram of (b).

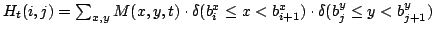

The image feature we use is the spatial histogram of the detected
objects. Let $H_t(i,j)$ be an $m \times m$ spatial histogram, with $m$
typically equal to 10:
$H_t(i,j) = \sum_{x,y} M(x,y,t)\,\delta(b^x_i \le x < b^x_{i+1})\,\delta(b^y_j \le y < b^y_{j+1})$,
where $M(x,y,t)$ is the binary moving object map, $\delta(\cdot)$ is 1
when its condition holds and 0 otherwise, and $b^x_i$, $b^y_j$ are the
boundaries of the spatial bins. The spatial histograms, shown in
figure 2(c), indicate the rough area of object movement. Similarly, we
can compute a motion and color/texture histogram for the detected
object using the spatiotemporal filter output. As we will see, these
simple spatial histograms are sufficient to detect many complex
activities.
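As a rough illustration of the spatial histogram, the sketch below counts the moving-object pixels of one binary frame in an $m \times m$ grid. The function name `spatial_histogram` and the choice of equally spaced bin boundaries are assumptions made for the example; the text does not state how the boundaries are chosen.

```python
import numpy as np


def spatial_histogram(mask, m=10):
    """Count the True pixels of a 2-D binary map in an m x m grid of bins."""
    mask = np.asarray(mask, dtype=bool)
    height, width = mask.shape
    rows, cols = np.nonzero(mask)  # coordinates of moving-object pixels
    # Assumed: equally spaced bin boundaries covering the whole frame.
    row_edges = np.linspace(0, height, m + 1)
    col_edges = np.linspace(0, width, m + 1)
    hist, _, _ = np.histogram2d(rows, cols, bins=[row_edges, col_edges])
    # hist[i, j] is indexed as (row bin, column bin).
    return hist.astype(int)


# Example: two small blobs in a 20 x 20 frame.
frame = np.zeros((20, 20), dtype=bool)
frame[0:2, 0:2] = True
frame[18:20, 10:12] = True
hist = spatial_histogram(frame, m=10)
```

Each frame is thereby reduced to $m^2$ counts that record only where movement occurred, not which object produced it, which matches the decision not to track objects.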


Mirko Visontai

2004-05-13
