Testing your Tests

Tags: testing

May 29 2020

By Timothée Paquatte and Harrison Goldstein

This post introduces mutagen, a plugin to pytest that provides easy-to-use tools for mutation testing. We are still actively working on the plugin and figuring out what features it needs—if you would like to get in on the ground floor and have a say in the features that we build, get in touch! We can help you get started with the library and provide advice for how best to use mutation testing in your project. Open an issue on GitHub or send us an email.

Mutation Testing

Testing is hard. Sometimes no matter how much time and effort you put in, bugs still slip through the cracks. While this will always be true, there are actually ways of testing your tests to better understand how well they can find bugs in your code.

Mutation testing is a technique that involves intentionally breaking, or mutating, pieces of code. The tester runs the test suite on the mutated code, with the hope that the tests catch any bugs that were introduced by the mutation. For example, given the code below

    def deduct_balance(acc, x):
        if acc.balance < x:
            return 0
        acc.balance = acc.balance - x

a tester might create one mutant where the balance check is inverted

    def deduct_balance_m1(acc, x):
        if acc.balance > x:
            return 0
        acc.balance = acc.balance - x

and another with an off-by-one error

    def deduct_balance_m2(acc, x):
        if acc.balance < x:
            return 0
        acc.balance = acc.balance - x + 1

and verify that the tests for deduct_balance fail when run with these incorrect implementations.

The (plausible) assumption here is that a test suite that catches intentionally injected bugs is more likely to also catch accidental bugs that may be lurking in the code – especially if the test suite quickly catches bugs due to a variety of mutants.
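To make the idea concrete, here is a minimal, self-contained sketch that runs one tiny test suite against the original function and both mutants. The Account class and the suite_passes helper are hypothetical names introduced only for this illustration; they do not come from any library.

```python
class Account:
    """Hypothetical account type, just enough for the example."""
    def __init__(self, balance):
        self.balance = balance


def deduct_balance(acc, x):
    if acc.balance < x:
        return 0
    acc.balance = acc.balance - x


def deduct_balance_m1(acc, x):  # mutant: inverted balance check
    if acc.balance > x:
        return 0
    acc.balance = acc.balance - x


def deduct_balance_m2(acc, x):  # mutant: off-by-one error
    if acc.balance < x:
        return 0
    acc.balance = acc.balance - x + 1


def suite_passes(deduct):
    """Run a tiny test suite against one implementation of deduct_balance."""
    try:
        acc = Account(10)
        deduct(acc, 3)
        assert acc.balance == 7   # a normal deduction goes through
        acc = Account(2)
        deduct(acc, 5)
        assert acc.balance == 2   # an overdraft leaves the balance alone
        return True
    except AssertionError:
        return False


results = {f.__name__: suite_passes(f)
           for f in (deduct_balance, deduct_balance_m1, deduct_balance_m2)}
print(results)
```

A suite is doing its job here when it passes on the original and fails on every mutant. A mutant on which it still passes points at a gap.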

Introducing Mutagen

In the rest of this post, we give an example showing how to add mutants to an existing project; in this case, we focus on adding mutants to a popular open source library called bidict, using a plugin that we have written for pytest called mutagen. Bidict introduces a bidirectional mapping data structure, which is used as a dictionary that can be reversed.

The pytest-mutagen package provides two separate ways to introduce mutations into code:

1. Declare a mutation inline, using the mut function. For the example above, we might modify the deduct_balance function itself to look like this:

        def deduct_balance(acc, x):
            if mut("FLIP_LT", lambda: acc.balance < x, lambda: acc.balance > x):
                return 0
            acc.balance = acc.balance - x

   This would create the same mutant as deduct_balance_m1 above, assigning it the name “FLIP_LT”. Since mut is a plain Python function but we don’t want both arguments evaluated all of the time, we wrap both branches in “thunks”.

2. Replace a whole function with another one, using the @mutant_of decorator. Again for the deduct_balance example, we might define a mutant “ADD_ONE” that injects the off-by-one error from deduct_balance_m2:

        @mutant_of("deduct_balance", "ADD_ONE")
        def deduct_balance_m2(acc, x):
            if acc.balance < x:
                return 0
            acc.balance = acc.balance - x + 1
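To see why the thunks matter, consider the following sketch of a mut-like helper. It is a hypothetical stand-in written for this post, not pytest-mutagen's actual implementation: the active dictionary and the way a mutant is switched on are assumptions made for illustration.

```python
# Hypothetical stand-in for an inline mutation helper (NOT pytest-mutagen's code).
active = {"name": None}  # name of the mutant currently switched on, if any


def mut(name, good, bad):
    """Call only the thunk that matches the currently active mutant."""
    return bad() if active["name"] == name else good()


calls = []  # records which branch was actually evaluated


def original():
    calls.append("original")
    return True


def mutated():
    calls.append("mutated")
    return False


normal = mut("FLIP_LT", original, mutated)    # no mutant active
active["name"] = "FLIP_LT"
flipped = mut("FLIP_LT", original, mutated)   # FLIP_LT switched on
print(normal, flipped, calls)
```

Because each branch is passed as a function, only one of them runs per call. If the two expressions were passed as plain values, both would be evaluated before mut was even entered, including any side effects or exceptions in the branch that is not selected.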

You can find complete documentation in the README file of our GitHub repository.

Next we will step through the process of adding mutation tests to a codebase. These examples have been modified a bit for readability, but they are real examples pulled from a real open-source project.

Step 1: Try simple mutations.

Our overall goal is to find mutations of the source code that are not detected by the tests: if we find one, this means that the test suite has some kind of gap that can be filled with new tests. For this purpose, let’s mutate some functions in the simplest way possible: replace them with a pass statement.[1] Here the expected behaviors of update and __delitem__ are, respectively, to update the dict with new (key, value) pairs and to delete an item, but we replace both bodies with pass.

    import pytest_mutagen as mg
    # We have to import the class that we are going to mutate
    # from the file bidict/_mut.py
    from bidict._mut import MutableBidict

    # Specify the test file that will be run when applying the mutants
    mg.link_to_file("test_properties.py")

    @mg.mutant_of("MutableBidict.update", "UPDATE_NOTHING")
    def update_nothing(self, *args, **kw):
        pass

    @mg.mutant_of("MutableBidict.__delitem__", "__DELITEM__NOTHING")
    def __delitem__nothing(self, key):
        pass

Then we can run the mutations with

    python3 -m pytest --mutate -q test_properties.py MutableBidict_mutations.py

and see whether or not the test suite catches them.

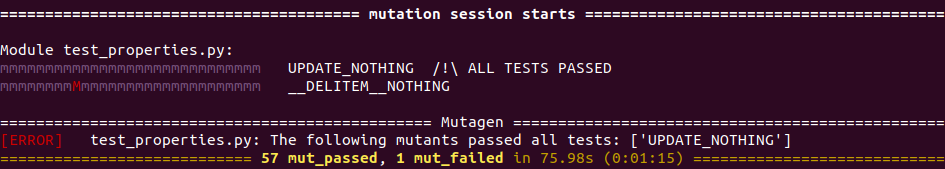

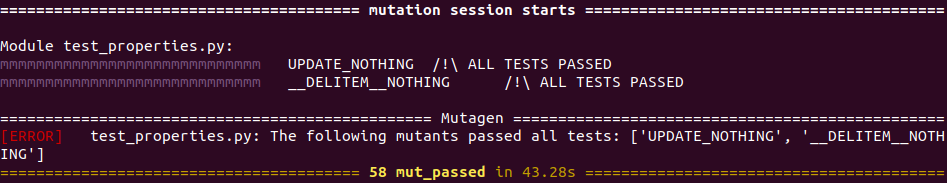

We can see in this picture that mutagen ran the 29 tests for each of the two mutants, UPDATE_NOTHING and __DELITEM__NOTHING. In the top half of the picture, the outcome of each test is represented by one letter: a capital red M means that the test failed, so the mutant was caught, and a lowercase purple m means that the test passed, so the mutant went unnoticed. UPDATE_NOTHING is therefore an interesting mutant, because no test spotted it; similarly, __DELITEM__NOTHING made only one test fail.
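One way to picture what such a run does for each mutant is: temporarily swap the method for its mutant, run every test, and record one letter per test. The sketch below follows that picture on a toy class. Store, applied and run are hypothetical names, and the patching mechanism is an assumption made for illustration rather than a description of how pytest-mutagen works internally.

```python
from contextlib import contextmanager


class Store:
    """Hypothetical class under test (a stand-in, not bidict)."""
    def __init__(self):
        self.items = {}

    def update(self, pairs):
        self.items.update(pairs)


def update_nothing(self, pairs):
    pass  # mutant: silently ignore the update


@contextmanager
def applied(cls, method_name, mutant):
    """Temporarily replace cls.method_name with the mutant, then restore it."""
    original = getattr(cls, method_name)
    setattr(cls, method_name, mutant)
    try:
        yield
    finally:
        setattr(cls, method_name, original)


def test_starts_empty():
    assert Store().items == {}


def test_update_stores_pair():
    s = Store()
    s.update({"a": 1})
    assert s.items == {"a": 1}


def run(test):
    """Return 'M' if the test fails (mutant caught), 'm' if it passes."""
    try:
        test()
    except AssertionError:
        return "M"
    return "m"


with applied(Store, "update", update_nothing):
    report = "".join(run(t) for t in (test_starts_empty, test_update_stores_pair))
print(report)
```

A report made only of lowercase letters would mean that no test noticed the mutant, which is exactly the situation worth investigating.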

Step 2: Focus on interesting mutants, make them more robust.

When a mutant is detected by only one or two tests, we can find out why those tests fail and try to make the mutant more subtle, so that the bugs it introduces are no longer caught. In the case of __DELITEM__NOTHING, the only failing test expects an exception when the item is not in the dict. If we therefore replace __delitem__ with a function that does nothing when the key exists and raises a KeyError otherwise, it passes all tests, even though it is far from the intended behavior:

    @mg.mutant_of("MutableBidict.__delitem__", "__DELITEM__NOTHING")
    def __delitem__nothing(self, key):
        if self.__getitem__(key):
            return
        raise KeyError

We now have two undetected mutants! This means we need more tests.
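The same effect can be reproduced on a plain dict. The sketch below uses a hypothetical SubtleDict class (not bidict) whose __delitem__ mimics the subtle mutant, and compares a check in the spirit of the existing test with a check on the actual result of a deletion. Both helper functions are illustrative assumptions, not code from bidict's test suite.

```python
class SubtleDict(dict):
    """Hypothetical dict whose __delitem__ mimics the subtle mutant."""
    def __delitem__(self, key):
        self[key]  # raises KeyError when the key is absent
        # ...but the item is never actually removed


def raises_on_missing_key(d):
    """Only checks that deleting an absent key raises KeyError."""
    try:
        del d["missing"]
    except KeyError:
        return True
    return False


def deleted_key_is_gone(d, key):
    """Checks the actual effect: after deletion the key must be absent."""
    del d[key]
    return key not in d


mutant_passes_old_check = raises_on_missing_key(SubtleDict(a=1))
mutant_passes_new_check = deleted_key_is_gone(SubtleDict(a=1), "a")
real_passes_new_check = deleted_key_is_gone(dict(a=1), "a")
print(mutant_passes_old_check, mutant_passes_new_check, real_passes_new_check)
```

The mutant satisfies the error-handling check but fails as soon as a test looks at what deletion is supposed to accomplish.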

Step 3: Add new tests to fill the holes.

The final step is to create new tests that catch the mutants. This is not as easy as it might seem, because simply writing a very specific test that catches one particular mutant is unlikely to make our test suite more robust. Instead, we need to fundamentally understand what holes in the test suite these mutants have unveiled and try to fill them in the most general way possible. Ideally, the tests that are added should fit with the other ones and be able to detect other mutants.

In our research group, we are partial to property-based testing (PBT), which works especially well with mutation testing (although unit tests are fine too). The idea behind PBT is pretty simple: rather than test particular cases, tests verify properties or invariants. For example, one property that might be useful for a dictionary is “a key that was just deleted should not be in the dictionary.” In Python this is possible with the help of the Hypothesis library, which introduces generators for different data types and runs each test function many times with generated inputs to validate the property.
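The core loop behind property-based testing can be sketched with the standard library alone. The code below is a deliberately simplified, hand-rolled illustration: check_property and random_dict_and_key are hypothetical helpers, and a real library such as Hypothesis adds much more on top, for example richer generators and shrinking of failing inputs.

```python
import random


def check_property(prop, make_input, runs=200, seed=0):
    """Call prop on many generated inputs; return the first counterexample or None."""
    rng = random.Random(seed)
    for _ in range(runs):
        value = make_input(rng)
        if not prop(*value):
            return value
    return None


def random_dict_and_key(rng):
    """Generate a non-empty dict of small ints plus one of its keys."""
    d = {rng.randint(0, 20): rng.randint(0, 20) for _ in range(rng.randint(1, 8))}
    return d, rng.choice(sorted(d))


def deleted_key_is_absent(d, key):
    """Property: a key that was just deleted should not be in the dictionary."""
    d = dict(d)  # work on a copy so the generated input stays intact
    del d[key]
    return key not in d


counterexample = check_property(deleted_key_is_absent, random_dict_and_key)
print(counterexample)
```

The property is stated once and exercised on many inputs, so it does not depend on the tester guessing the one input that exposes a bug.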

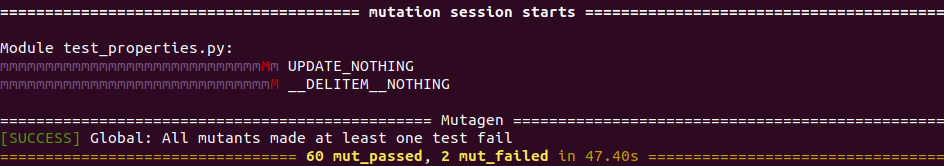

For bidict we added two correctness properties, and voila, now all the mutants are caught:

# Slightly simplified version of both properties, for readability reasons

    from random import choice
    from hypothesis import given
    # st refers to the strategies module used by bidict's test suite

    @given(st.MUTABLE_BIDICTS, st.PAIRS)
    def test_update_correct(mb, pair):
        key, value = pair
        mb.update([pair])
        assert mb[key] == value

    @given(st.MUTABLE_BIDICTS)
    def test_del_correct(mb):
        rand_key = choice(list(mb.keys()))
        del mb[rand_key]
        assert rand_key not in mb

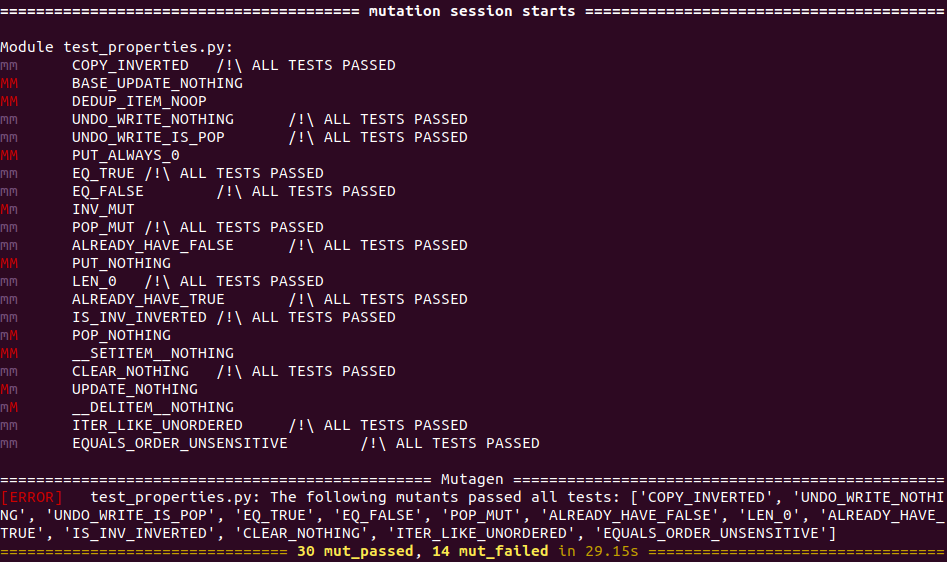

We can then run other mutants to check whether the properties we’ve added actually catch some of them:

Therefore even some simple correctness properties can catch several mutants!

On coverage

Often testers care about coverage: to what extent do our tests cover the functioning of each part of the code? There are several popular code coverage metrics: function coverage, statement coverage, condition coverage and so on. While working with bidict, we found a perfect example of the limitations of traditional code coverage metrics. It turns out that bidict’s continuous integration system keeps track of how new pull requests affect code coverage. After submitting our PR, we received an email telling us that our PR did not improve code coverage (file, line, and branch), which was already at 100%. We found this quite ironic, since our PR clearly filled a gap in the test suite (for instance, no test checked whether __delitem__ actually deleted the item or not).

Accordingly, mutation testing can be seen as an additional coverage measure. Although it’s hard to quantify, it gives a powerful qualitative metric for finding holes in our test suites. Mutation coverage is a more abstract notion of coverage that takes into account the expected behavior of the code rather than simply its execution.
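A small sketch illustrates the difference. All names below (remove_item, its mutant, and the two suites) are hypothetical and chosen for illustration. The weak suite executes every line and both branches of remove_item, so a line or branch coverage tool would report full coverage, yet it cannot tell the original from a mutant that never deletes anything.

```python
def remove_item(store, key):
    """Original: delete key from store, raising KeyError if it is absent."""
    if key not in store:
        raise KeyError(key)
    del store[key]


def remove_item_mutant(store, key):
    """Mutant: same check, but the deletion itself is dropped."""
    if key not in store:
        raise KeyError(key)


def weak_suite(remove):
    """Hits both branches of the original but never inspects the store."""
    remove({"a": 1}, "a")      # exercises the deletion path
    try:
        remove({}, "a")        # exercises the error path
    except KeyError:
        return True
    return False


def stronger_suite(remove):
    """Adds the missing assertion about behavior."""
    store = {"a": 1}
    remove(store, "a")
    return weak_suite(remove) and "a" not in store


print(weak_suite(remove_item), weak_suite(remove_item_mutant))
print(stronger_suite(remove_item), stronger_suite(remove_item_mutant))
```

Both suites cover the same lines of the original. Only the second one kills the mutant, which is the kind of difference that execution-based coverage metrics cannot show.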

Conclusion

Mutation testing is a powerful tool for testing your tests. By introducing bugs intentionally and verifying that the test suite catches those bugs, testers can be more confident that their test suites are robust. Mutagen offers the first tool we are aware of for doing this form of mutation testing in Python.

We would like to develop the best tool possible, so we need input from real users. If you have a project that might benefit from mutation testing, create an issue on our GitHub page or send us an email. We will help get you up and running with mutagen, and we will take your workflow into account when developing features in the future.

[1] Sometimes this will cause everything to break because the function is supposed to return something; in that case, rather than pass we might choose return None, return 0, etc.