Developed by: Eric Eaton ()

at the University

of Maryland Baltimore County, Department

of Compter Science and Electrical Engineering

This educational software allows students to develop an intelligent agent for a search and rescue application in a partially-observable gridworld. It allows students to focus on high-level AI issues for solving the problem rather than low-level robotic navigation. It was used as the semester project for the Fall 2007 CMSC 471 Artificial Intelligence course at UMBC.

This software is free for non-commercial purposes. Please contact me if you would like to use any part of this software for commercial applications. Feel free to excerpt whatever you wish from this page for use in educational or course materials. However, please cite the software as:

Eric Eaton. (2008). Gridworld Search and Rescue: A Project Framework for a Course in Artificial Intelligence. In the Proceedings of the AAAI-08 AI Education Colloquium, July 13, Chicago, IL.Also, if you use this framework for your course project, please send me an e-mail letting me know. Also, if you wish, I can add your e-mail to a mailing list that I will use to announce bug fixes and new versions.

With the increasing nationwide concern with domestic disasters, there has been a surge in developing rescue mechanisms for victims of these disasters. One major recent effort includes developing autonomous robots that search through a disaster area for victims. These autonomous robots can search in areas that would be hazardous for rescue workers, aiding their search and possibly providing limited treatment to the victims.

Robocup rescue and the NIST Urban Search and Rescue competitions are two of the more popular forums for developing agents to solve this problem. However, the current simulators offered by these groups focus on realistic simulation, which focuses much of the initial development of an intelligent agent on low-level robotic hardware issues, such as driving in a straight line from one location to another.

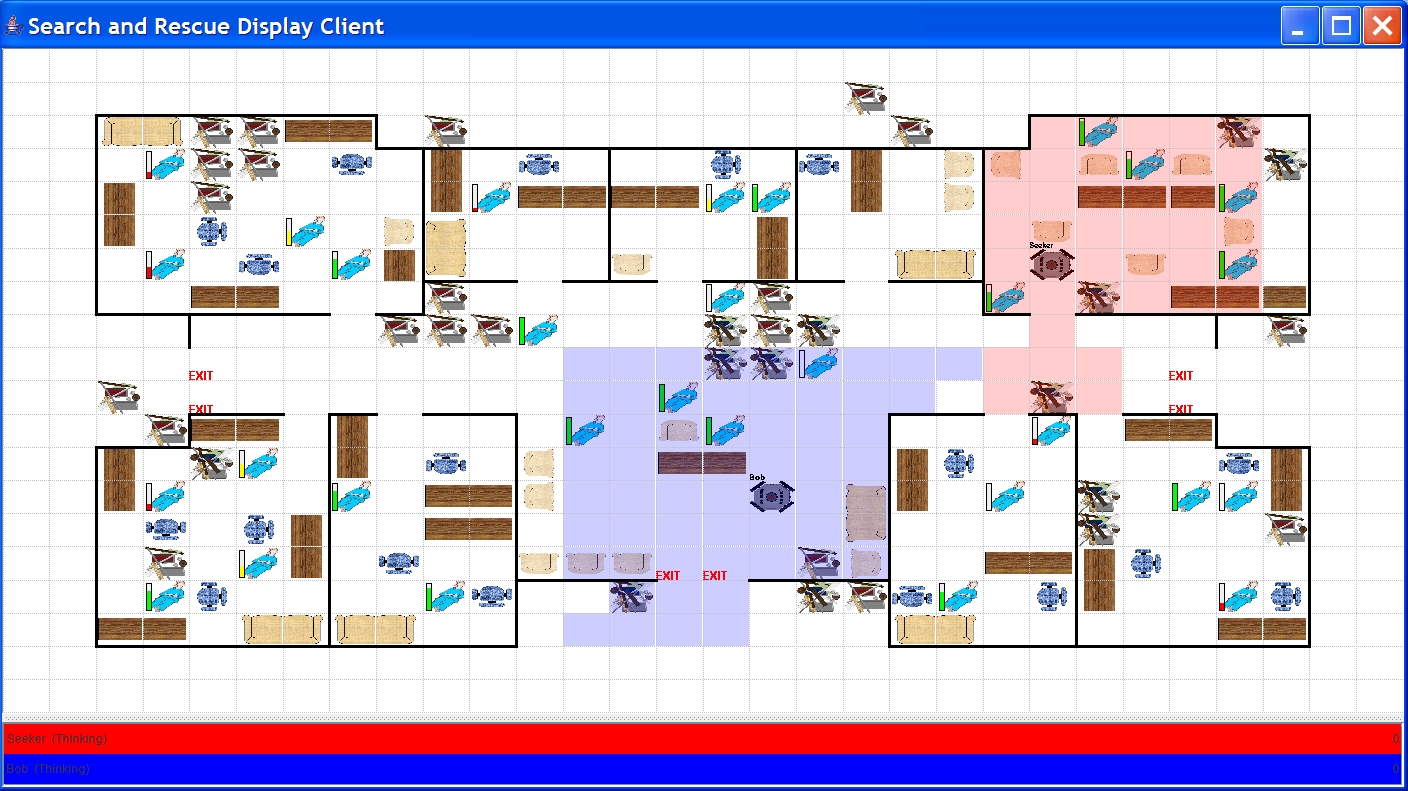

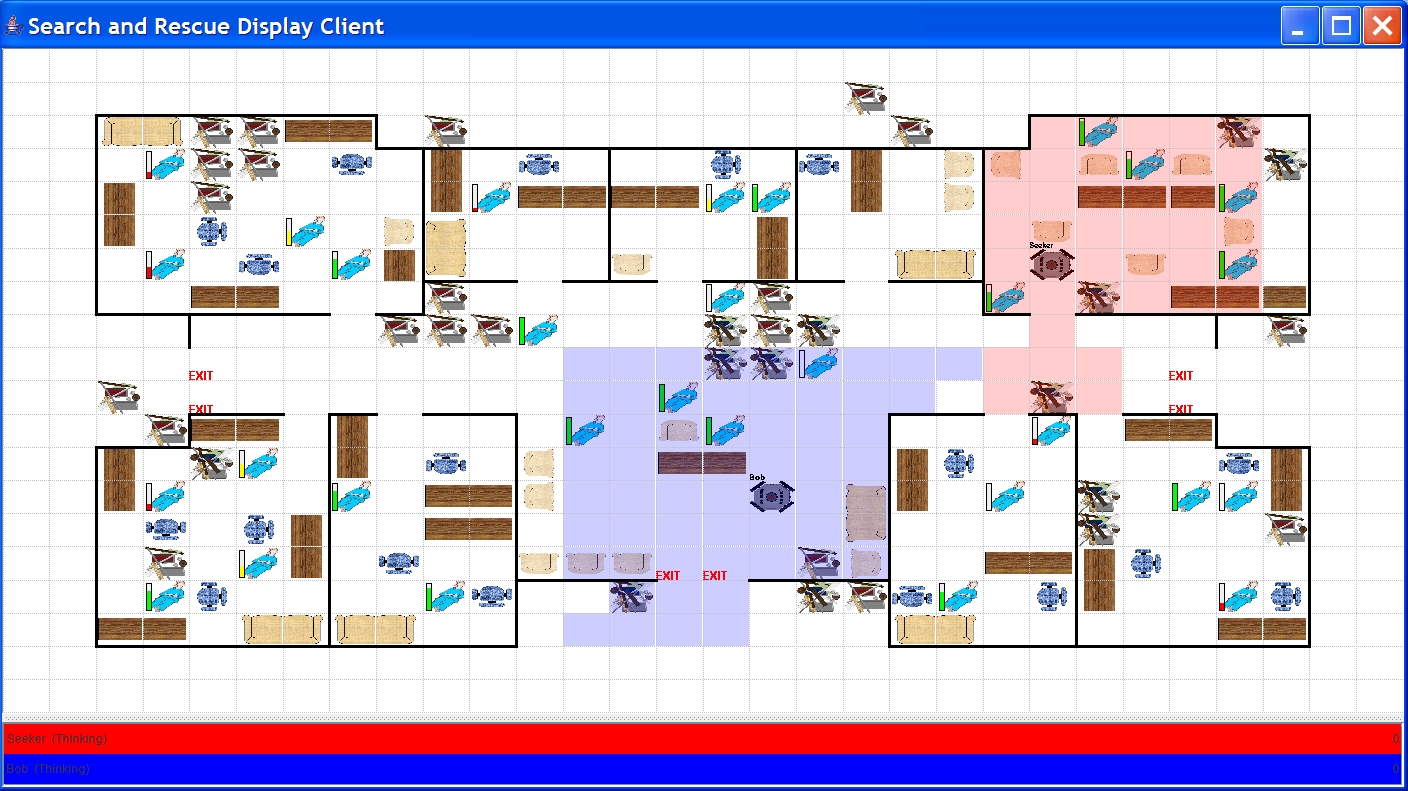

The Gridworld Search and Rescue simulator allows students to develop an intelligent agent to solve the search and rescue problem from a higher-level perspective, allowing them to ignore the low-level details and focus on applying artificial intelligence techniques to the problem. The simulator controls a 2D gridworld of the interior of a building struck by disaster. Several gridworlds are included in the distribution, and there is a map editor to facilitate the creation of new environments. The simulator supports multiple interacting agents and can be extended to support new features.

The intelligent agent's task is to search the building for victims and carry as many of them as possible to safety while they are still alive in a limited period of time. The intelligent agent will control a simulated robot with sophisticated sensors and effectors, including a long-range object recognition system, GPS, short-range medical diagnostic sensors, and accurate navigation. The agent will be preloaded with the structural plans for the building, but will need to explore the building for victims simultaneously with other competing agents. The disaster may have blocked certain areas of the building, so the agent must be robust to such changes. Additionally, the structural plans of the building include only the floorplan, so the agent should be robust to the location of objects within the building (such as desks and chairs) that may complicate navigation. The robot's long-range object recognition system and GPS will be useful in navigating the disaster-striken building.

The agent's primary objective is to locate victims and to carry them to one of the building's exits. Agents are only credited with rescuing live victims, so the robot's short-range medical diagnostic sensors may provide information useful for determining the status of a patient's injuries and predicting how long they will survive. The agent might use this information to triage the victims and determine the order in which to rescue them.

The specific project requirements are, of course, up to the individual instructor. However, if you would like to see example requirements, the project description used for the Fall 2007 Artificial Intelligence course at UMBC is available here: example project description. (Please note that some of the aspects of the simulator have changed since this description.)

The simulation server and visualization tool for the gridworld are both written in java, and require java 1.5 or later. The current version of the client library is written in lisp; however, we plan on including a java library in the future (it would be reasonably simple to create your own, if you so choose, since most of the necessary data structures are already implemented with the server). For this reason, it would be easiest for students to implement the intelligent agents in either java or lisp. All communication between the client and the server is in XML across network sockets, so you could implement your own client library in another language, should you so choose (if you do, I would appreciate it if you would contribute it to this project).

The simulator is divided into three parts: a simulation server, a visualisation tool that displays the gridworld during the simulation, and a client library for interacting with the server.

To prevent academic dishonesty, the software release does not contain the source code for the simulation server or visualization tool. However, I am happy to release the source code to any instructor wishing to use this in their course. Simply e-mail me, and I will send you the source code after verifying your status as an instructor at an academic institution.

I am currently in the process of developing the next version of this software,

which should include more features. If you are planning on using this software

soon, please let me know so I can give you the most recent development version. The java client library is only available in the development version, so you must e-mail me if you wish to use the java client. Version 0.05 below includes the lisp client.

To use the distribution, download and uncompress the archive available under Downloads. The simulator and display are both based in Java 1.5, so you will need java installed on the computer you use for development. For all of these commands to work, you must be in the top level folder for the files you just uncompressed. The software must be started in this order: display, simulation controller, and then any clients. Each component runs in its own terminal, so you will need to open three terminals. Below are instructions for starting each component.

Try not to modify any files included in the releases. This way, you can just unzip new releases directly into the same directory, overwriting older versions of the files. If you do this, be certain to backup your previous version. If you do need to modify any files, be certain to store copies outside of the directory so they aren't accidentally overwritten.

Note to Linux/Mac users: To make the example command lines below work on your operating system, don't put the classpath specification in quotes and use a colon to separate the jar files instead of the semicolon.

The display requires only a port argument, and you can optionally specify the size of the display (although, it is really unnecessary, as you can resize the window). Here is an example command that will start the display on port 2000:

java -Djava.security.policy=searchandrescue.policy -classpath SearchAndRescue.jar searchandrescue.display.Display 2000

The display and controller connect using java RMI, which requires a security policy defined in the file searchandrescue.policy. You may need to modify this policy file, depending on your system configuration and campus firewall.

The controller requires various configuration parameters, a port to listen on for clients, and a link to the display. Here is an example command that will start the controller on port 3000 using a simple gridworld with one client running for 50 timesteps (and processing the initial knowledge for 30 seconds) with a link to the display:

java -Djava.security.policy=searchandrescue.policy -classpath "SearchAndRescue.jar;jdom.jar;weka.jar" searchandrescue.Controller 3000 gridworlds/simple.gw 1 50 30 5 localhost 2000

The parameter that comes before the "localhost" controls the disaster severity. It ranges from 0-10 and controls the level of injuries of the victims. 10 results in an insane amount of injuries, and 0 corresponds to a very slight disaster which may minorly injure some victims. (I recommend setting this between 5 and 8 for a good search and rescue scenario.)

I've provided a manual agent that allows you to manually navigate around the gridworld. It requires a link to the simulator and the name of your agent. Here is an example command that will start the client from lisp.

(cd "lispclient") ; changes into the lispclient directory

(load "load-manual-agent.lisp") ; loads the manual agent

(main "localhost" 3000 "LispClient")

If you wish to have a custom graphic displayed for your agent, include the filename of that graphic file as the optional fourth argument to main. The display should support a number of image formats, but be sure to test your image to make certain that it works.

(main "localhost" 3000 "LispClient" "image.bmp")

There is also a java version of the manual client available as searchandrescue.ManualClient. They are very similar and require the same arguments (including the optional filename of the image for the agent). Here is an example starting the java manual client.

java -classpath "SearchAndRescue.jar;jdom.jar" searchandrescue.ManualClient localhost 3000 JavaClient

The simulated building lies on a rectangular gridworld with m rows and n columns. Each cell in the grid can be occupied by only one object at a time (with the single exception of your agent carrying another object, as described later).

The gridworld has cardinal directions north, south, east and west and an inherent coordinate system, with the south-west corner having coordinate (0,0). The x-coordinate increases from the origin for cells to the east, and the y-coordinate increases for cells to the north. All coordinates remain fixed throughout the duration of the simulation.

Each cell in the gridworld may have up to four walls, corresponding to each of the four directions. Objects cannot move through the walls and sensors cannot penetrate the walls. Walls cannot be demolished. For simplicity, the building does not contain any doors that require opening.

The agent starts at one of the building's entrances (assigned randomly) and must rescue victims by returning them to any of the building's entrances (for simulated pickup by rescue workers). The gridworld cells at the building's entry points are flagged with special markers, allowing the agent to detect them within long-distance sensor range. The building's outer walls lie inside the gridworld's boundaries, allowing passage outside of the building for the agent to move between entrances, if necessary.

At initialization, the agent will be given the size of the gridworld, the location of all walls of the building (the building's "structural diagram"), and the location of all building entry points. Note that the number and location of victims, the location of other agents, and the location of objects within the building are unknown to the agent at this time. However, it is known that no victim is outside the building (otherwise, rescue workers would have picked them up already).

While the agent knows the building's layout at initialization and can navigate roughly based on it, there may be objects within the gridworld that complicate the navigation paths. For example, a hallway may be blocked by debris, effectively acting as another "wall" in the building. The building will contain an assortment of standard objects (such as furniture, chairs, etc.), some of which can be moved by the agent and others of which cannot. Since only one object can occupy a gridworld cell, these objects will also complicate navigation for the agent. Moreover, since multiple rescue agents are present in the environment simultaneously, another agent may move an object during the simulation, making the location of these objects slightly dynamic.

Figure 1: A screenshot from the gridworld display showing a search and rescue simulation in-progress with two rescue agents. The colored areas depict each robot's long-distance sensor range. Victims with varying degrees of injury are scattered about the gridworld. The agents' goal is to rescue living victims by carrying them to one of the exits.

Your intelligent agent will control a simulated robot with sophisticated sensors and effectors. In keeping with a level of realism, the sensors cannot penetrate the walls, making the environment partially observable to the intelligent agent. Additionally, the rescue agent's effectors are constrained to a limited number of actions.

The rescue agent is equipped with the following sensors:

Long-range object recognition and localization sensors

The long-range sensors cover a rectangular range around the agent. This rectangular range covers four grid cells in all directions, forming a nine-by-nine square with the agent in the center of the square. Any object within this range will be identified by name and its precise coordinate location. Also, any object being carried by that object will be identified. Additionally, any walls within this range will be included. The long-range sensors cannot penetrate walls, so the covered area may be reduced depending on the agent's location and will not always be a complete rectangle.

These sensors are always active and provide information to the agent immediately following every action.

A short-range medical diagnostic sensor array

The short-range sensor array must be activated by the agent and provides information on the victim in the specified adjacent cell (north, south, east, or west only). This sensor characterizes the victim as a feature vector of real-valued data. This feature vector includes such information as the victim's heart rate, systolic blood pressure, diastolic blood pressure, respiratory rate, and other vital signs.

The simulator distribution includes labeled training data that students can use to learn a model to triage the victims and predict how long they will survive. The exact specification of the feature vector is available in this data.

This sensor is activated by the action sense and requires a direction {north, south, east, west} to specify the adjacent cell.

Self-feedback sensors

The self-feedback sensors provide information on the agent itself and any object it is carrying. This information will include the agent's current location, the current simulation time, and the name of the object it is carrying. Additionally, it will provide the status of the last action the agent attempted to execute, such as whether the action succeeded.

These sensors are always active and provide information to the agent immediately following every action.

The rescue agent is equipped with omni-directional wheels, allowing the agent to move in any of the cardinal directions immediately, and a lift capable of carrying an object. These effectors allow the agent to perform the following actions:

Move

The move action provides for basic navigation in the gridworld along the cardinal directions. It requires a direction {north, south, east, west} to specify where to move. The action may fail if it attempts to violate the rules of the gridworld, such as trying to move the agent through a wall or to a cell already occupied by an object.

Pickup

The pickup action allows you to pick up an object in an adjacent cell and carry them with you as you navigate the gridworld. It requires a direction {north, south, east, west} to specify the adjacent cell. The robot is not able to pick up all objects, so the move may fail. Also, the agent can carry only one object at a time. Obviously, the pickup action cannot work through walls.

Dropoff

The dropoff action is the opposite of the pickup action: it drops the object your agent is carrying into the specified adjacent cell. Like pickup, it requires a direction {north, south, east, west} to specify the adjacent cell. The dropoff action cannot work through walls or when another object is occupying the target cell.

Sense

The sense action activates the short-range medical diagnostic sensors on an object in the specified adjacent cell specified by a direction {north, south, east, west}. The feature vector response from the sensor is returned to the agent as the status of this action. Recall that the short-range sensors cannot penetrate walls.

No-Op

Additionally, there is a noop action available to the agent, which specifies that the agent will perform no action this timestep. Trying to execute an invalid action (one that doesn't exist) will default to a noop.

Like victims in the real-world, the simulated victims each behave differently. They are autonomous and move around the environment. Less-injured victims may move around quite a bit.

Also like victims in the real-world, the simulated victims may die. They may be dead at the start of the simulation or may die during the simulation. The intelligent agent has access to victim vital signs and diagnostic information through its short range sensors; this data might be useful in triaging the victim and predicting how long the victim will live.

Victims in general "want" to be rescued, and will not move if they see a rescue agent. However, you may observe some victims exhibiting "scared" behavior and running away from the rescue agents. Such behaviors are not built into the simulation, but may be emergent.

Upon registration with the simulation, the agent will be given the initial information about the gridworld (including the gridworld size, wall locations, and the building exits). The agent will be given 1 minute to process this information before the simulation begins.

The simulation will run for a certain number of timesteps and then total the score for each agent. Each action is considered to take one timestep. I recommend making the simulation time sufficiently large, say 200-400 timesteps, depending on the size of the gridworld and the number of victims.

After initialization, at each simulation timestep, the agent will be presented with its current perception of the world. This perception will include data from the long-range sensors, and self-feedback. If the short-range sensors were activated the previous timestep, the result from those sensors will be available via the self-feedback. Once the agent is presented with the perception, it will have a limited time (one minute) in which to respond with an action that the robot should execute in the current timestep.

The simulation will proceed as soon as all agents have responded with their actions for the current timestep. However, if an agent does not respond back with an action within the limited time, that agent's action for the current timestep will default to a noop. Even if the agent chooses to execute a noop action, it should respond and explicitly execute that action so the simulation can proceed, rather than waiting for the timer to expire.

Obviously, if the simulation runs for a large number of timesteps and each agent takes the maximum amount of time to respond with the next action, the simulation will run for a very long time. Please keep this in mind and consider that your agent should return a new move within a few seconds under most circumstances.

It may be the case that multiple rescue agents choose to execute actions which conflict. For example, two agents may try to move into the same cell at the same time. In such a case, one agent's action will succeed and the other agents' conflicting actions will fail; this choice will be determined randomly.

Actions from a non-agent (such as a victim) will never conflict with any action from an agent.

Each agent will be credited with one point for each live victim it delivers to a building exit. Dead victims are not worth any points, nor are victims that rescue themselves by wandering to an exit.

At the end of each search and rescue episode (after the allotted number of timesteps have elapsed), the agent will be informed of its score. This feedback might be useful to reinforcement learning schemes or other AI techniques which involve performance feedback.

To implement your intelligent agent, follow these steps.

initialize() This function will be called upon registering the agent with the simulator. The return value does not matter. You do not need to override this function if your agent does not require initialization.

choose-action(perceptions) This function will be called during each perception-response cycle. It takes in a perceptions data structure which represents the agent's current perceptions. It must return an action data structure.

process-result(score) This function will be called at the end of the simulation to inform the agent of its score. You do not have to override this function if your agent does not need performance feedback. It takes in a number; the return value does not matter.

You can look at the manual-agent.lisp file if you would like to see an example implementation of these functions. Skeleton versions of these functions are also provided in the client.lisp file.

This section describes the programming interface that your code will use to interact with various provided Lisp data structures. These are all contained in the utilities.lisp file, so that is the only source code file you should need to read in detail.

This section provides an overview of the data structures defined in utilities.lisp. Additionally, many of the data structures have additional accessor functions that allow you to access structure members without calling the built-in accessors. For example, you can call the coordinates() function on a cell, an object, or an agent and Lisp will return the coordinates of the appropriate data structure.

There is also a global parameter *current_time* which is kept up-to-date with the current simulation timestep.

Before the simulation starts, the simulator sends the client initial information about the gridworld, including the location of walls and the various exits. The client code automatically processes this information into a set of data structures which your code can access. After it does this processing, it calls the initialize() function, allowing your agent to perform any initialization.

The wall locations and the size of the gridworld are efficiently represented as adjacency lists, making your implementation simpler. This allows you immediately know which coordinates you can move to from a location. The set of walls restricts movement in the gridworld, so this is a representation of the gridworld as a graph, with the vertices being coordinates and the edges providing coordinates that your agent can move between. The adjacency lists are stored in the global hash-table *adjacent_coordinates*, which maps from a given coordinates data structure to a list of coordinates which are adjacent to it. This adjacency information DOES NOT take into account coordinates which are blocked by objects in the gridworld. The following utility functions are provided for interacting with the map:

Locations on the gridworld can also have various markers (which are represented as strings) which identify specific locations. Initially, the only marked coordinates are the exits. These coordinates are marked using the string "EXIT" by the simulator. During the course of the simulation, you can add your own markers to specific locations (for example, your agent could mark locations which are doorways). Markers are stored in the global hash-table *marked_coordinates*. The following utility functions allow you to interact with the map markers:

The choose-action(perceptions) function that you need to override while implementing your agent takes in a perceptions data structure that contains the agent's perceptions. While you can directly interact with this data structure, we've provided several utility functions that might make your job easier:

Thanks to Aaron Curtis for additional coding on the lisp client and the display, and development of the map-editor. Thanks also to the students of the Fall 2007 CMSC 471 course at UMBC for their feedback and suggestions on the project.